Autonomous cars are coming for you soon, and they will be talking. While Google cars already display your personal initials in robo-taxi mode, they lack an advanced vocabulary for communicating with humans outside the vehicle. That will change. A new literacy is about to spring forth in Google’s fleet. It’s called a “tertiary language.”

“Tertiary language” is not a new idea. Historically, the term referred to the acquisition of a third language by multilingual speakers (and gifted learners) who had already acquired two others, say a Japanese speaker who was also proficient in English. Today, the idea is extending to cars.

Tertiary Language:

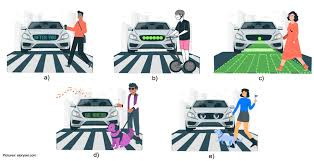

In car talk, “tertiary language” refers to the signaling built into a driverless vehicle so that humans, whether on foot or in another vehicle, can predict the vehicle’s intentions. “Intentions” is a clumsy way of describing what the vehicle will do next, say slow down or stop. For the time being, the tertiary language will be one-way, from car to human.

There’s a good reason for cars to ‘talk’: as drivers and pedestrians, we are accustomed to relying on two-way communication for safety, especially when there’s uncertainty or a potential blind spot. At an intersection, a driver, a pedestrian or a bicyclist signals to others with a raised hand, an upward glance, or eye contact. These gestures show an awareness of each other and serve as a protocol for working out who has the right of way. Similar communications often take place between drivers: at a four-way stop, drivers use hand signals or the horn to clarify who goes next. Human nature being what it is, these gestures and noises can become flip or offensive.

For the past five years, probably longer, researchers have studied how to implement vehicle-to-human speak. The RAND Corporation, a think tank and defense consultancy, developed a complex set of guidelines to help cars, pedestrians and bicyclists communicate.

In the Dome:

Now Waymo, the autonomous driving division of Google, is introducing a tertiary language to its vehicles. It will start with the LED dome, which sits on the roof of the vehicle and resembles a modern-day periscope. Pedestrians in front of the vehicle will see shifting gray and white rectangles indicating that the vehicle plans to yield to them. Drivers behind the vehicle will see a yellow pedestrian symbol informing them that a pedestrian is crossing. These messages will join the chimes and other alerts used today.
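For readers who think in code, the dome's vocabulary so far can be pictured as a tiny lookup table: one driving situation, two audiences, two messages. The Python sketch below is purely illustrative. The names `Audience`, `DomeMessage`, `YIELDING_TO_PEDESTRIAN` and `message_for` are invented for this example and are not Waymo's software; the only facts taken from the description above are the two display patterns and what each is meant to convey.

```python
from dataclasses import dataclass
from enum import Enum


class Audience(Enum):
    """Who is looking at the dome, relative to the vehicle (hypothetical names)."""
    AHEAD = "pedestrians in front of the vehicle"
    BEHIND = "drivers behind the vehicle"


@dataclass(frozen=True)
class DomeMessage:
    pattern: str  # what the LED dome displays
    meaning: str  # what the human is meant to understand


# Illustrative table covering only the single situation described in the text:
# the vehicle is yielding to a pedestrian.
YIELDING_TO_PEDESTRIAN = {
    Audience.AHEAD: DomeMessage(
        pattern="shifting gray and white rectangles",
        meaning="the vehicle plans to yield to you",
    ),
    Audience.BEHIND: DomeMessage(
        pattern="yellow pedestrian symbol",
        meaning="a pedestrian is crossing ahead",
    ),
}


def message_for(audience: Audience) -> DomeMessage:
    """Return the message a given audience would see while the car yields."""
    return YIELDING_TO_PEDESTRIAN[audience]


# Print what each audience would see.
for audience in Audience:
    msg = message_for(audience)
    print(f"{audience.value}: {msg.pattern} ({msg.meaning})")
```

Notice that the table runs in one direction only: nothing in it lets a pedestrian or driver answer back.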

For the time being, this “tertiary language” is one-way. In the future, pedestrians and bicyclists might carry their own version of an LED or use an app on their phone to signal their presence. Although these devices are not on the market yet, they might gain acceptance as a way to curb the spiraling rate of pedestrian and bicycle injuries and deaths.

Roadblocks:

Meanwhile, the one-way tertiary language of Waymo vehicles faces two roadblocks. First, Google needs to standardize the “tertiary language.” It would be a virtual Babel if Cruise, a competing autonomous vehicle company, favored an alternative set of colors, say blue to signal “yield.” Likewise, humans need to become conversant with the tertiary language and learn to recognize the color patterns.

The other roadblock is getting humans to respect this tertiary language. While it sounds easy, people still choose to crash through the flashing red signals at a railroad crossing or plow through work zones marked off with cones. Operations of the Cruise autonomous vehicle in San Francisco were suspended after human employees chose to suppress full video coverage of a collision. It’s human nature to sometimes mislead or outwit machines. The intricacies of human communication are more than tertiary.